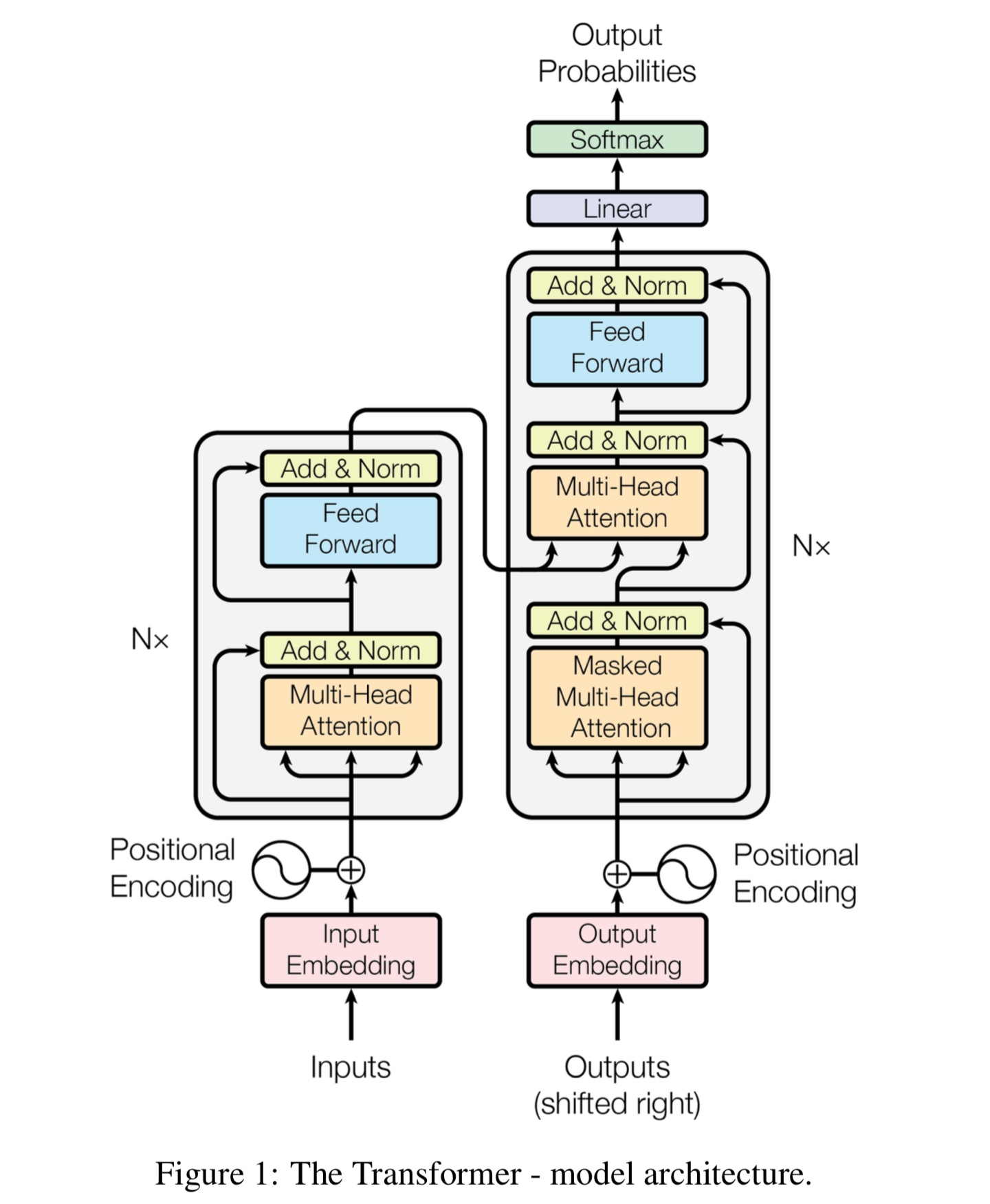

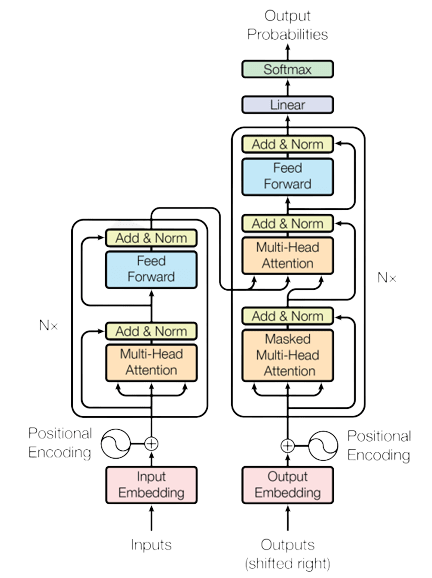

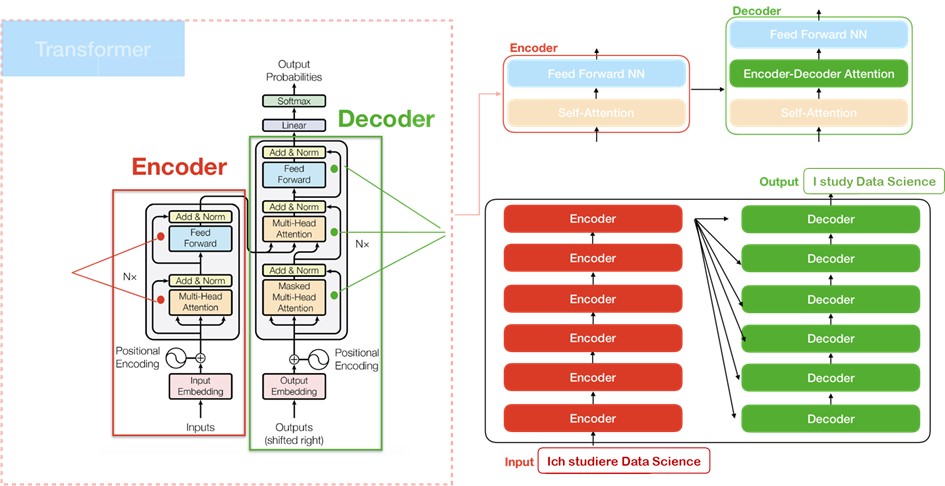

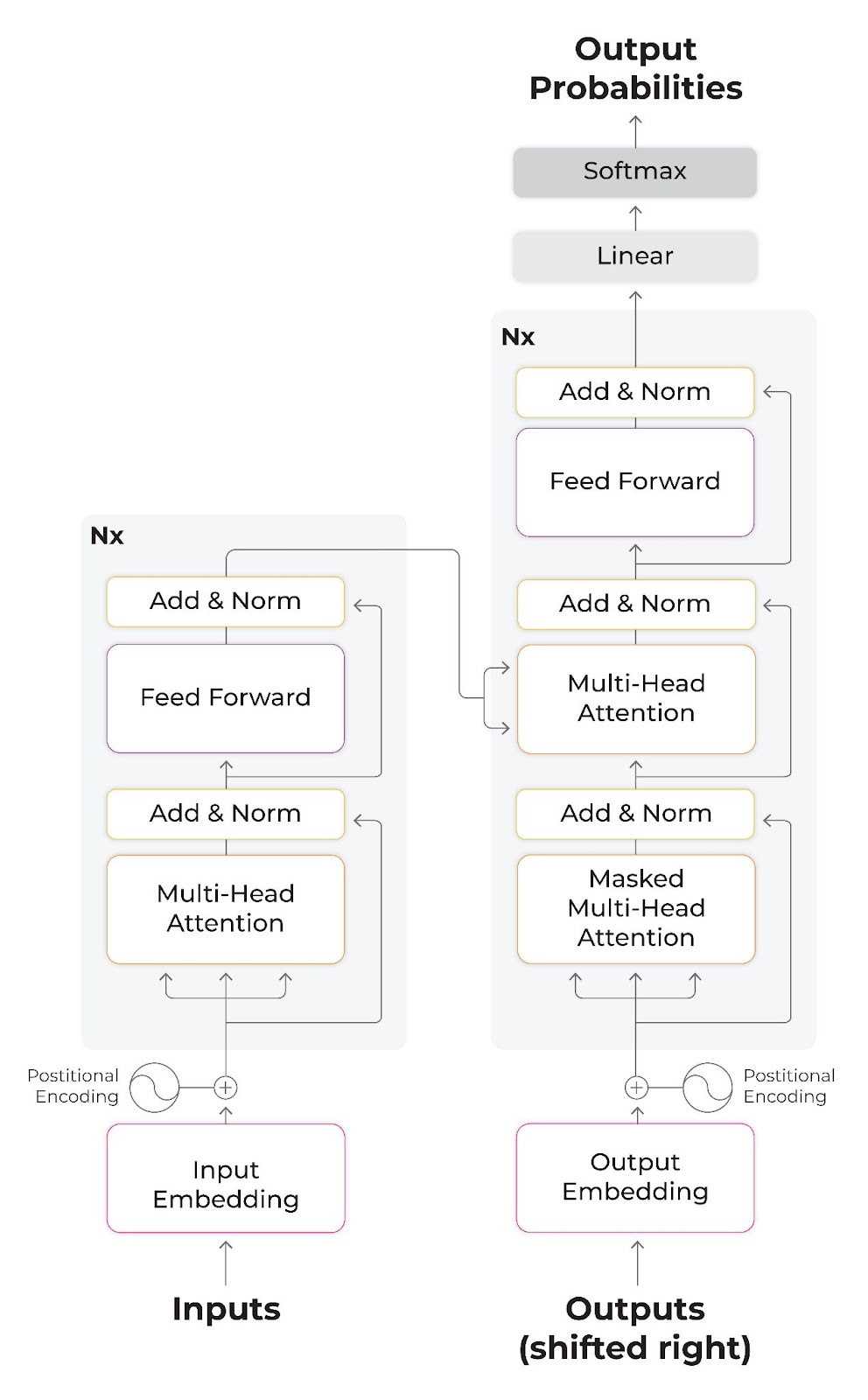

nlp - What are the inputs of encoder and decoder layers of transformer architecture? - Data Science Stack Exchange

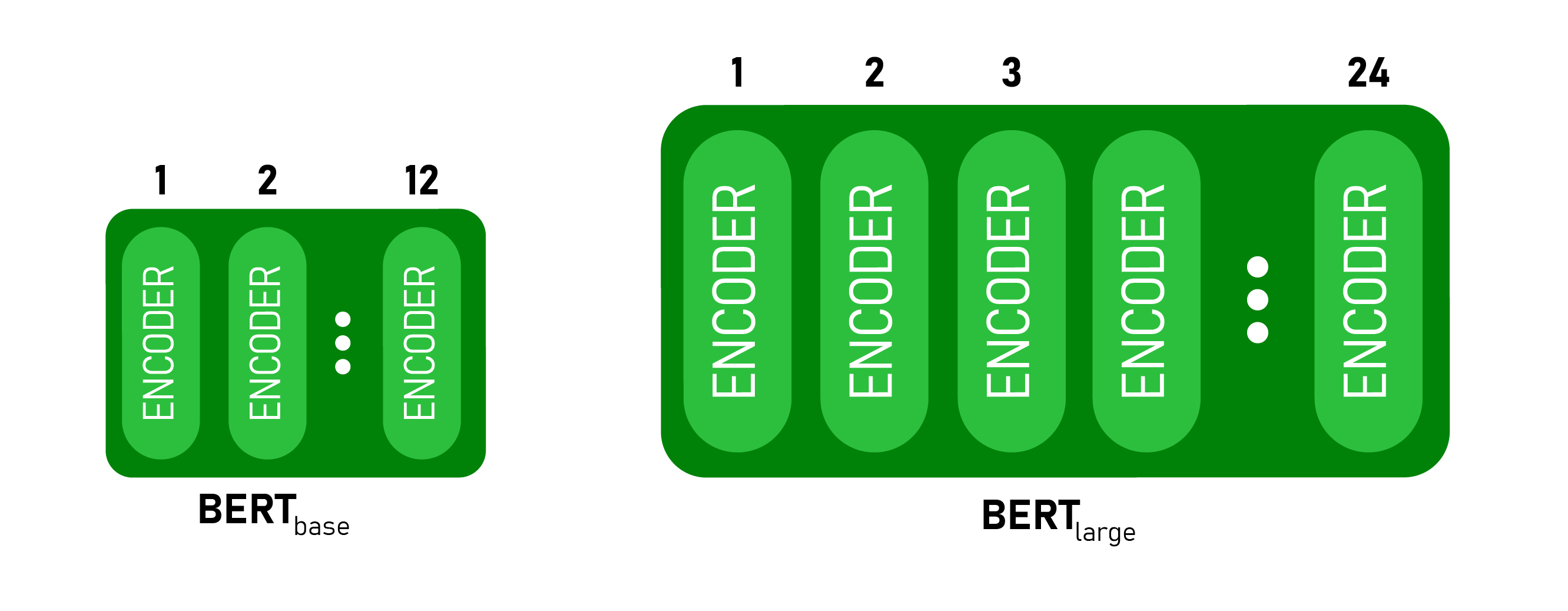

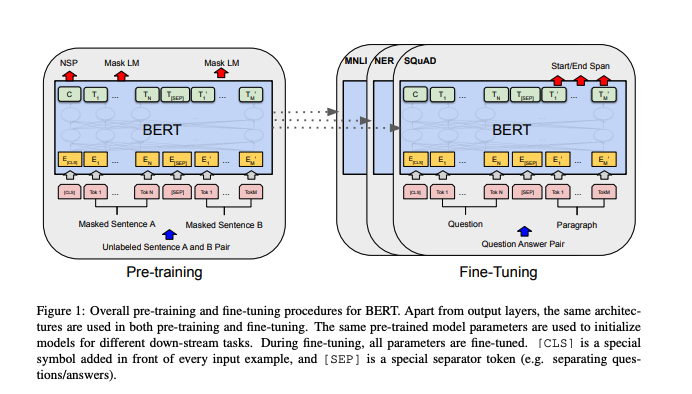

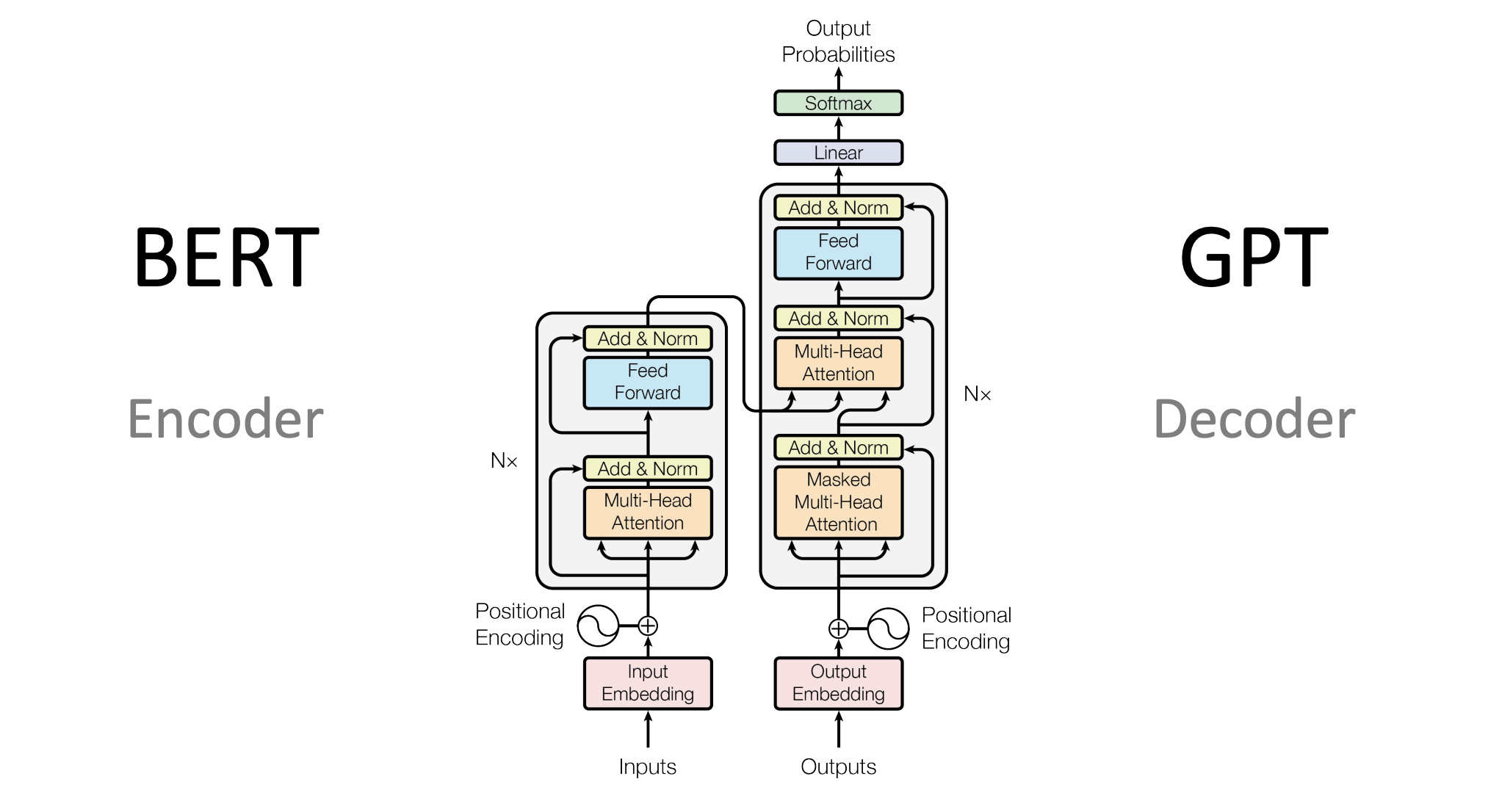

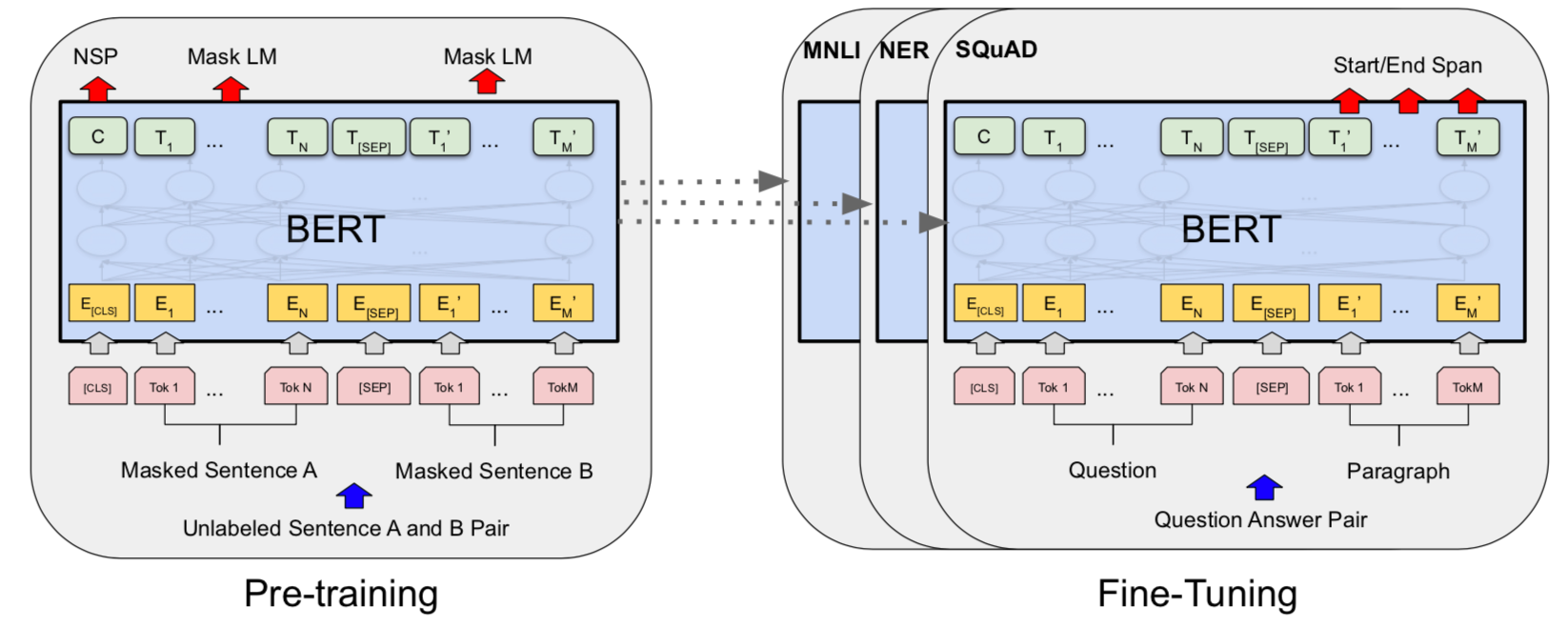

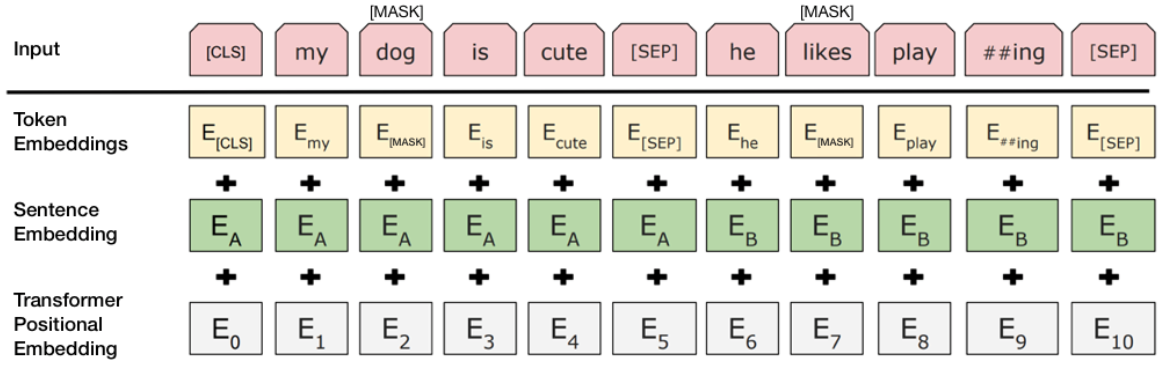

10 Things You Need to Know About BERT and the Transformer Architecture That Are Reshaping the AI Landscape

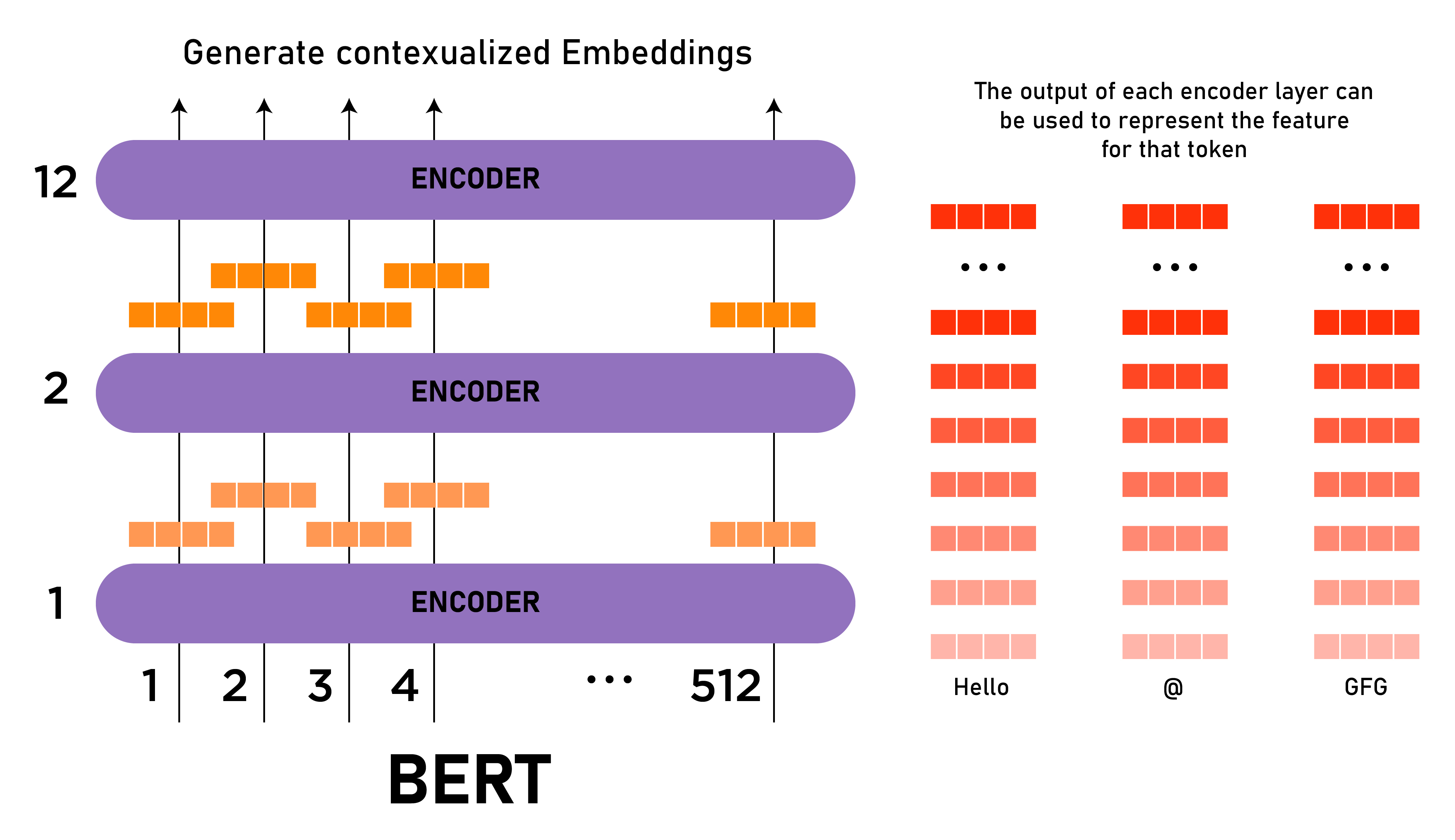

The Transformer based BERT base architecture with twelve encoder blocks. | Download Scientific Diagram

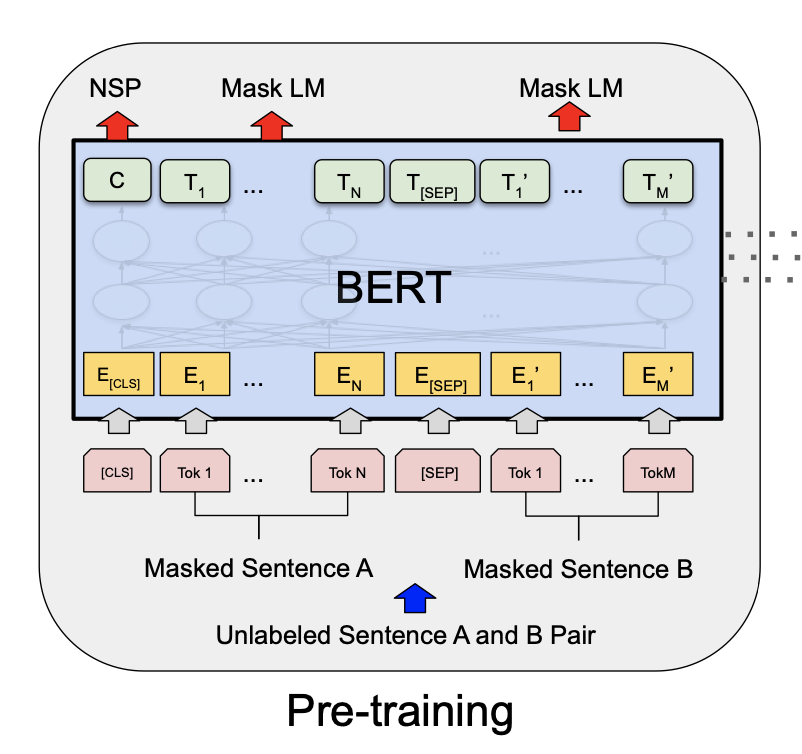

![\[PDF\] FBERT: A Neural Transformer for Identifying Offensive Content | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/bbcea6eb922b0736cc9e9acc649c8d90cbc8264c/3-Figure1-1.png)