Transformer-XL Explained: Combining Transformers and RNNs into a State-of-the-art Language Model | by Rani Horev | Towards Data Science

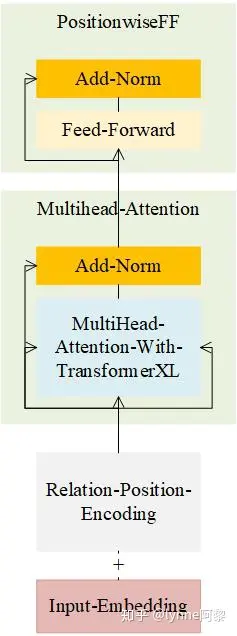

Transformer-XL model architecture showing the early and late MLM fusion... | Download Scientific Diagram

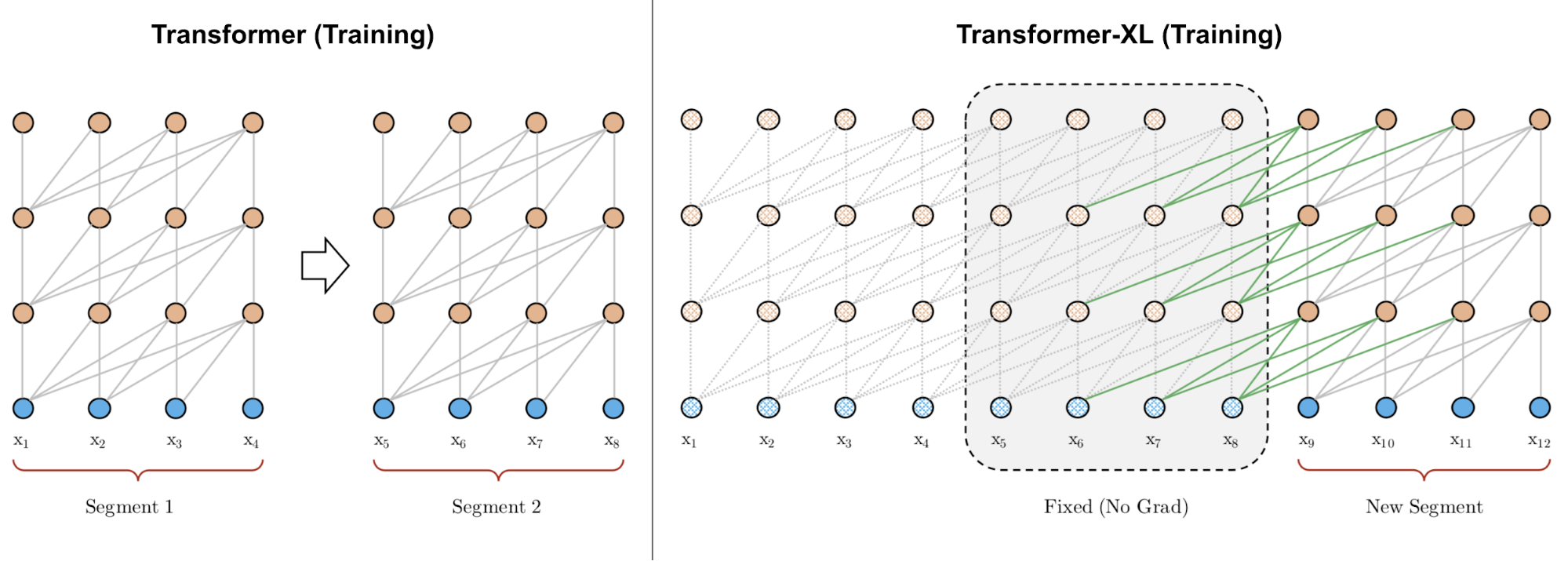

Transformer-XL: Going Beyond Fixed-Length Contexts | by Rohan Jagtap | Artificial Intelligence in Plain English

AK on Twitter: "Transformer-XL Based Music Generation with Multiple Sequences of Time-valued Notes pdf: https://t.co/xTrQBOTspz abs: https://t.co/GiCuFyyVOc https://t.co/k8fVWqGmku" / Twitter

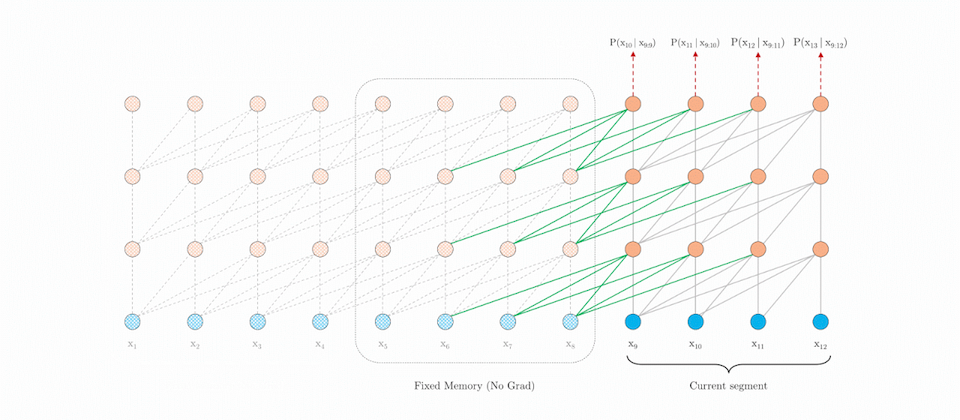

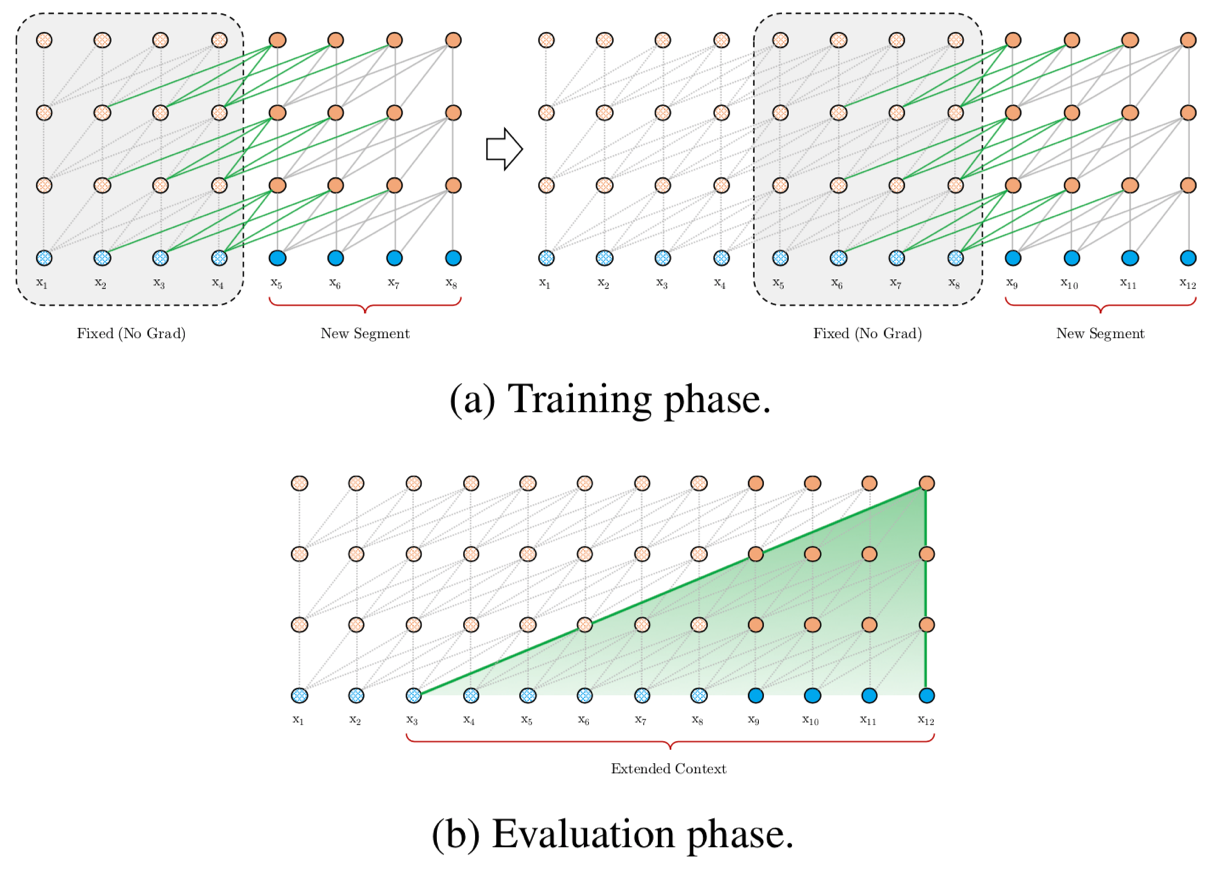

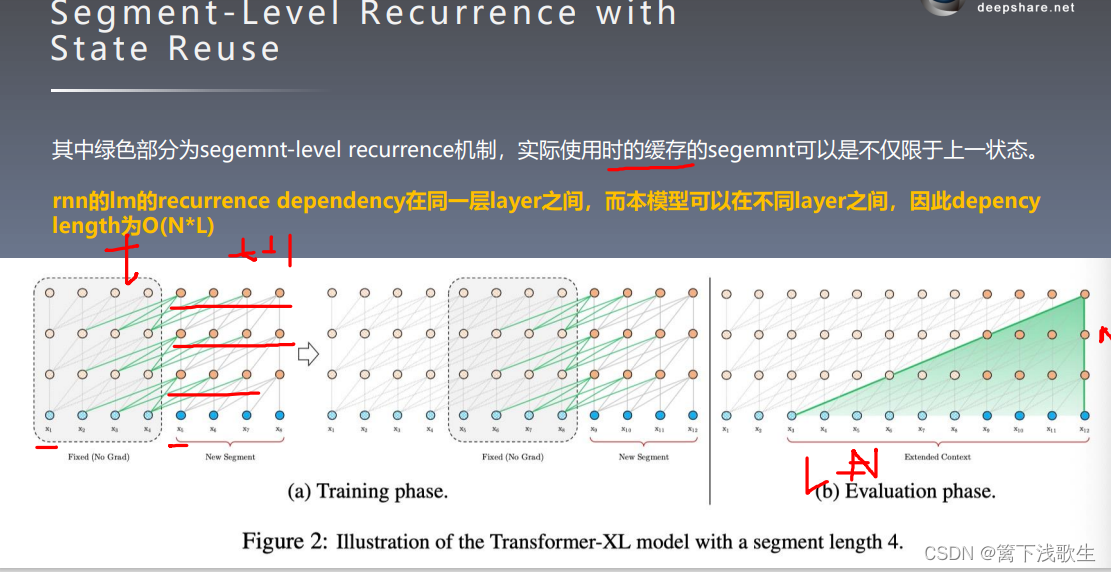

deep learning - What are the hidden states in the Transformer-XL? Also, how does the recurrence wiring look like? - Data Science Stack Exchange

The Land Of Galaxy: Paper explanation - Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context